AI Agent Context Feature

Company

Onlook (YC W25)

Role

Lead Product Designer & Design Engineer

Timeline

4 weeks

Skills

UX Research, Competitor Research, Prototyping

Tools

Cursor, Onlook, Figma

Overview

Designed, prototyped, and shipped a context-adding feature for Onlook's Design-IDE that lets users inject contextual information into AI prompts through an @-mention interface. The feature closes the gap between user intent and AI comprehension, aims to improve response accuracy, and fits into existing designer workflows.

Challenge

Enable users to add contextual information to AI agent prompts for more accurate, relevant results in Onlook's Design-IDE AI Chat interface.

Without purpose-built context integration, users had to manually reference code files, design assets, and components when prompting the AI agent, which led to less accurate outputs and a broken user flow. The opportunity was to create an intuitive feature that lets users add the right context to AI agent chats with minimal friction, optimizing both usability and flow for people and task performance for the agent.

Problem Discovery

User Pain Point Validation:

- Conducted extensive product testing to understand the current-state friction of adding context to prompts

- Interviewed and observed users to understand the most commonly referenced contextual items and their process for adding them

- Surveyed Onlook’s Discord community to identify current-state pain points in adding context to AI chats and to learn which context users find most valuable

Key Findings:

- There is strong demand for an improved way to add context to agent prompts

- Users most frequently add component names from the code panel (e.g., “header”) and brand styles (e.g., colors, fonts) from the left design panel

- Users wish to reference other pages in their agent prompts

- Manual retrieval of context from Onlook’s codebase and brand styles panel disrupts user flow

- Users prefer to refine designs iteratively through agent prompts, which requires convenient access to the most recently used context

Current State Example of Adding a Common User Prompt:

Competitive Research:

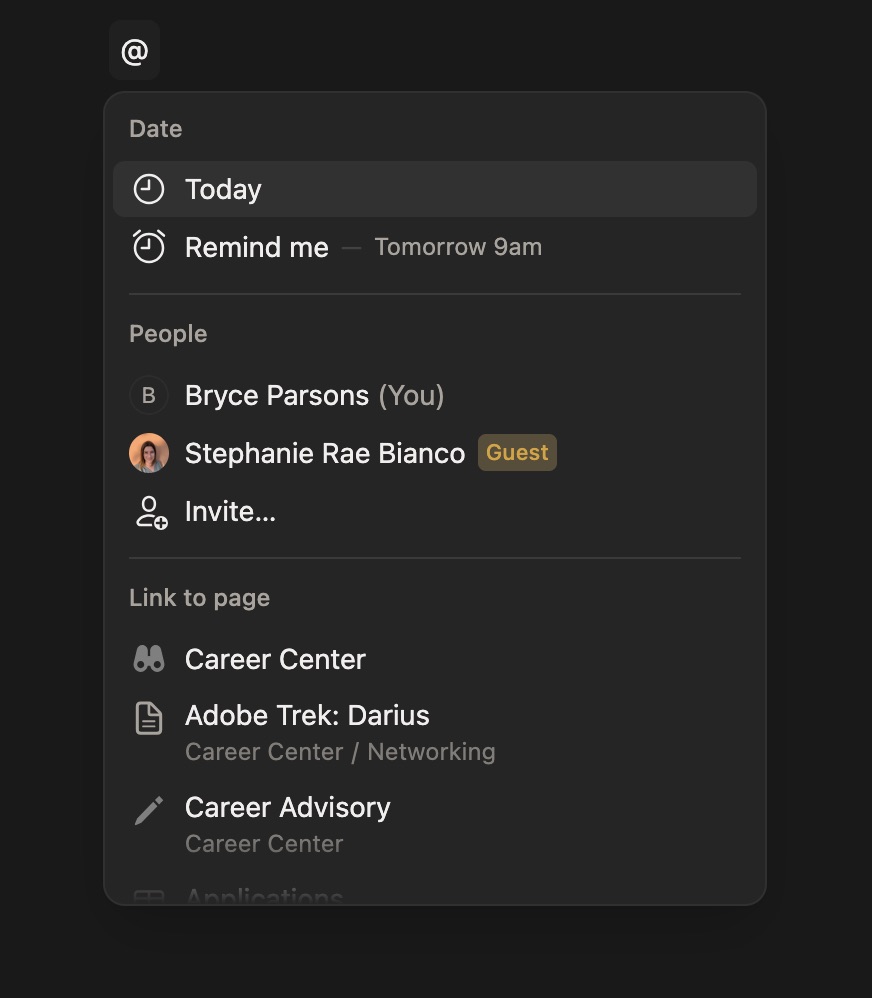

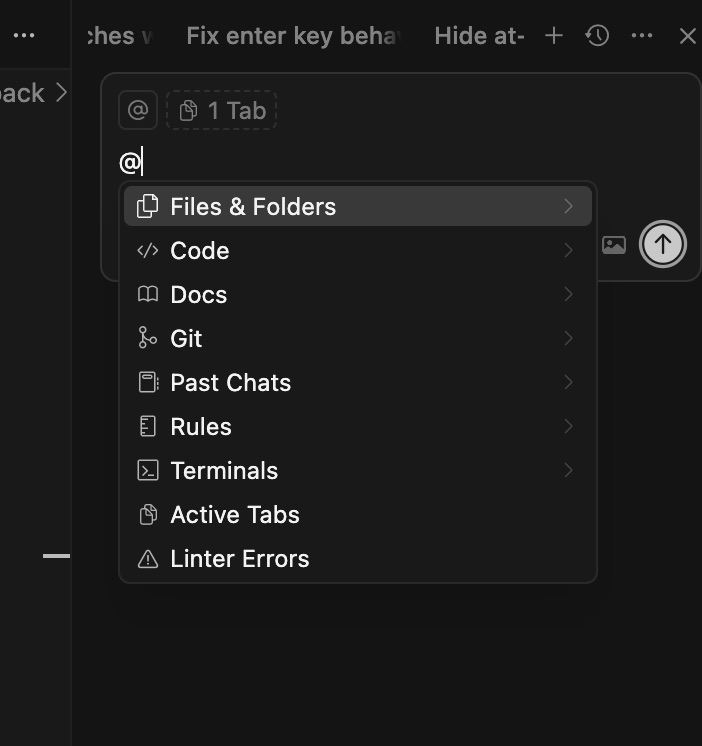

- Analyzed context-adding features across common AI-first tools (Cursor, Dia) and collaboration platforms (Slack, Notion)

- Identified the @ symbol as the de facto industry standard for contextual referencing

- Confirmed an opportunity for competitive differentiation against Lovable, Figma, V0, and Framer: all four offer similar experiences, but none uses an @-context feature

Competitor Examples:

Notion:

Cursor:

Solution Development

Design Principles:

- Prioritize user flow with keyboard-first interaction

- Leverage familiar @ pattern for intuitive adoption

- Abstract complexity away from the user’s experience while maintaining rich contextual options to support the agent’s performance
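To illustrate the keyboard-first and @-pattern principles, here is a minimal TypeScript sketch of how a prompt input might detect an in-progress @-mention and move the menu highlight with the arrow keys. The function names (`activeMentionQuery`, `nextIndex`) and the rule that an "@" must follow whitespace are assumptions made for illustration; they are not taken from Onlook's codebase.

```typescript
// Hypothetical helpers for illustration only; not Onlook's actual implementation.

// Returns the text typed after the nearest "@" before the caret,
// or null when the caret is not inside a mention.
function activeMentionQuery(text: string, caret: number): string | null {
  const before = text.slice(0, caret);
  const at = before.lastIndexOf("@");
  if (at === -1) return null;
  // Assumption: "@" must start the input or follow whitespace,
  // so strings such as email addresses do not open the menu.
  if (at > 0 && !/\s/.test(before.charAt(at - 1))) return null;
  const query = before.slice(at + 1);
  // Assumption: whitespace ends the mention.
  return /\s/.test(query) ? null : query;
}

// Moves the highlighted menu row with wrap-around.
// Keys other than the arrows leave the highlight unchanged.
function nextIndex(current: number, count: number, key: string): number {
  if (count === 0) return -1;
  if (key === "ArrowDown") return (current + 1) % count;
  if (key === "ArrowUp") return (current - 1 + count) % count;
  return current;
}
```

In this sketch, typing "Make @hea" with the caret at the end yields the query "hea", which the menu could then pass to its search. Pressing ArrowDown on the last row wraps back to the first, so the user never has to reach for the mouse.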

Feature Specifications:

- @-Menu Categories: Recents (3 most recent), Code (components, files, pages), Design Elements (brand colors, typography)

- Interaction Design: Keyboard and mouse controls supporting seamless flow and user preference

- Search Functionality: Fuzzy search to accommodate imperfect name recall
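The specifications above can be sketched in TypeScript as follows. This is a minimal illustration, not the shipped implementation: the `ContextItem` type, the subsequence-based scoring in `fuzzyScore`, and the helper names are all assumptions. The production feature may use a different matching algorithm or a library.

```typescript
// Hypothetical types and helpers for illustration; not taken from Onlook's codebase.
type ContextCategory = "code" | "design";

interface ContextItem {
  id: string;
  label: string;
  category: ContextCategory;
}

// Subsequence-based fuzzy score: every query character must appear in the
// label in order. Returns null when there is no match; higher is better.
function fuzzyScore(query: string, label: string): number | null {
  const q = query.toLowerCase();
  const l = label.toLowerCase();
  let score = 0;
  let from = 0;
  let prev = -2;
  for (const ch of q) {
    const found = l.indexOf(ch, from);
    if (found === -1) return null;
    score += found === prev + 1 ? 2 : 1; // reward consecutive characters
    if (found === 0) score += 1; // reward a match at the start of the label
    prev = found;
    from = found + 1;
  }
  return score;
}

// Filters items to those matching the query, best matches first.
function searchContext(query: string, items: ContextItem[]): ContextItem[] {
  return items
    .map((item) => ({ item, score: fuzzyScore(query, item.label) }))
    .filter((r): r is { item: ContextItem; score: number } => r.score !== null)
    .sort((a, b) => b.score - a.score)
    .map((r) => r.item);
}

// The Recents category keeps the 3 most recently used items, newest first.
const MAX_RECENTS = 3;

function pushRecent(recents: ContextItem[], picked: ContextItem): ContextItem[] {
  const withoutPicked = recents.filter((r) => r.id !== picked.id);
  return [picked, ...withoutPicked].slice(0, MAX_RECENTS);
}
```

With this scoring, a query like "hdr" still matches a component labeled "Header", which is the kind of imperfect recall the search is meant to tolerate. Re-selecting an item moves it to the front of Recents instead of duplicating it.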

Prototyping & Implementation

Technical Implementation and Iterative Development Process:

- Figma Mockups: Rapid initial concept exploration and iteration

- Onlook Prototype: Built an interactive prototype using Onlook’s own product

- User Testing: Validated with co-founders and key users

- Development:

  - Used Perplexity to generate a PRD, optimized for a coding agent, from my prototype, findings, and requirements

  - Componentized the prototype in Onlook and exported the prototype’s codebase

  - Prompt-engineered Cursor (primarily using gpt-5 and claude-4-sonnet) to implement the prototype feature in my branch of Onlook’s codebase

  - Iterated on and refined the build using a localhost version of the product

Design Prototype Built in Onlook

Final Solution Built in Cursor

Created a PR and worked with open-source contributors to complete the feature implementation.

Results

AI Agent Context Adding Issue Resolved:

Users can now add context to AI agent prompts without interrupting their flow, resulting in a smoother user experience, higher productivity, and better agent performance.

Expected Outcomes:

- Enhanced AI response accuracy through improved contextual prompting

- Streamlined user workflow eliminating manual context retrieval

- Increased user satisfaction and retention through better AI-agent interaction experience

- Sustained competitive advantage and differentiation against AI-design-tooling competitors

Success Metrics Framework:

- AI-agent evaluation performance improvements (e.g., response correctness, code quality, visual fidelity)

- Workflow efficiency gains (e.g., reduced prompt iteration cycles, reduced time on task, increased number of unique coding tasks completed per session)

- Overall user retention and satisfaction scores

Expect to see this feature in one of Onlook's next major releases this fall.